Riptides delivers Vault/OpenBao sourced credentials to AI and Cloud APIs without user-space secrets

In the previous post, Secure OpenAI API Key delivery with Riptides, we demonstrated how Riptides securely delivers short-lived OpenAI API keys sourced from Vault/OpenBao to AI agents using kernel-enforced sysfs files. That approach already eliminates static secrets, reduces blast radius, and removes the need for Vault clients or tokens in workloads.

This model provides several key advantages:

- A dramatically reduced blast radius compared to static keys

- A clean separation between identity, access policy, and application logic

- Strong isolation between co-located workloads, enforced at the Linux kernel level

- A familiar consumption model for developers (read a credential, use it)

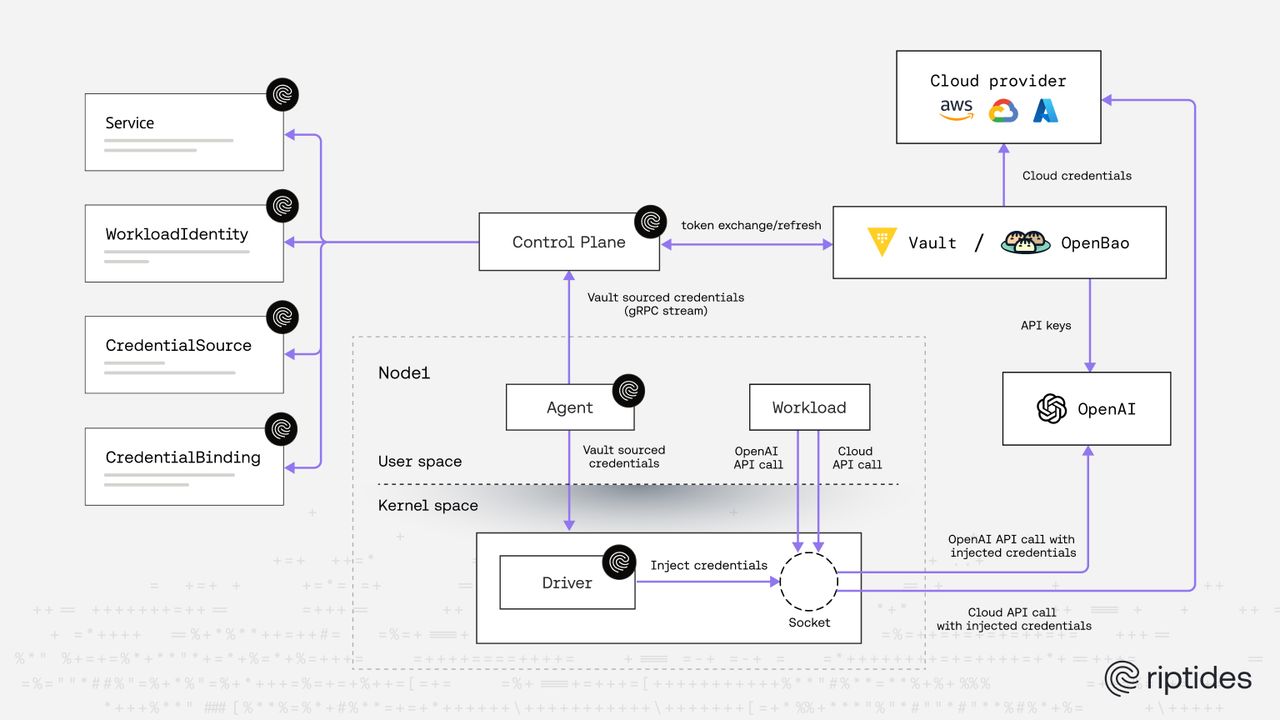

This post goes further. Instead of delivering credentials to workloads, Riptides injects Vault-sourced credentials directly into outbound requests, in kernel space, at the moment they are sent. API keys and cloud credentials never appear in application user space: not in files and not in configuration.

By combining Vault/OpenBao as the system of record for short-lived credentials with Riptides' on-the-wire injection model, organizations can enforce identity-based access to OpenAI and cloud APIs with zero secret handling in workloads, while preserving compatibility with existing applications.

Existing Riptides capability: secretless cloud credential injection

Before introducing Vault, Riptides already supported on-the-wire injection of temporary cloud credentials that it provisions itself, based on workload identity.

These credentials are:

- Short-lived

- Issued on demand

- Never stored or managed by developers or operators

You can learn more about this capability in these posts:

- OCI authentication using SPIFFE-based workload identity

- On-demand credentials for AI assistants on GCP

- On-the-wire injection for secretless AWS Bedrock access

In these scenarios, Riptides itself provisions temporary cloud credentials and injects them directly into outbound requests at write time, in kernel space.

- No secrets.

- No SDK changes.

- No sidecars.

Why Vault / OpenBao changes the equation

Injecting Vault/OpenBao-sourced credentials does not replace Riptides' existing cloud credential injection capability; it complements it.

Many organizations already rely on Vault or OpenBao as their central system for issuing:

- Short-lived cloud provider credentials

- Database credentials (both static and dynamic)

- API keys for internal and external services

For these teams, replacing Vault is neither desirable nor realistic. This is where the approach presented in this post comes in.

By integrating Vault/OpenBao with Riptides via tokenex, organizations can now:

- Keep Vault/OpenBao as the credential authority

- Continue using existing Vault policies, TTLs, and audit logs

- Eliminate secret distribution and handling on the infrastructure where workloads run

- Apply the same on-the-wire injection model to credentials sourced from Vault

In other words, if you already use Vault to issue credentials, you can now consume those credentials without ever exposing them to workloads.

From secure delivery to full elimination of secrets in workloads

The sysfs-based approach presented previously already offers strong guarantees. But it still involves materializing credentials in user space, even if briefly and under kernel control. On-the-wire injection takes the final step.

With this model:

- Credentials are fetched from Vault/OpenBao

- Authentication to Vault/OpenBao is JWT-based and tokenless

- Credentials are injected directly into outbound requests in kernel space on demand

- The workload never sees, stores, or processes the credential

We refer to this as on-the-wire credential injection.

How it works at a high level

1. A workload initiates an outbound request, for example:
   - An AI agent calling OpenAI
   - A service accessing AWS APIs

2. Riptides intercepts the request in kernel space. No application changes. No SDK wrapping.

3. Credentials are requested from Vault/OpenBao:
   - No Vault tokens are stored or distributed
   - Vault policies remain fully in control

4. Credentials are injected into the request at write time:
   - OpenAI API keys are added to HTTP headers
   - Cloud credentials are injected in the appropriate protocol-specific form

5. The request leaves the node authenticated.

The workload itself remains entirely unaware of the credential.
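At the byte level, "adding the API key to HTTP headers" is a small transformation of the outgoing request buffer. The Python sketch below shows that transformation in user space on a plaintext HTTP/1.1 request. It is an illustration only, not Riptides' kernel implementation, and it ignores TLS entirely. The helper name inject_bearer_token is hypothetical, and dropping any Authorization header the workload already sent (for example, a placeholder an SDK insists on) is an assumption of this sketch.

```python
def inject_bearer_token(request: bytes, token: str) -> bytes:
    """Return a copy of a raw HTTP/1.1 request with an Authorization header set.

    Hypothetical helper: any Authorization header already present is dropped,
    so the injected credential is the only one that reaches the server.
    """
    head, sep, body = request.partition(b"\r\n\r\n")
    if not sep:
        raise ValueError("incomplete HTTP request: missing header terminator")
    request_line, *headers = head.split(b"\r\n")
    # Remove any Authorization header sent by the workload (case-insensitive).
    kept = [h for h in headers if not h.lower().startswith(b"authorization:")]
    kept.append(b"Authorization: Bearer " + token.encode("ascii"))
    # Body is untouched, so Content-Length stays valid.
    return b"\r\n".join([request_line, *kept]) + sep + body


raw = (
    b"POST /v1/chat/completions HTTP/1.1\r\n"
    b"Host: api.openai.com\r\n"
    b"Content-Type: application/json\r\n"
    b"Content-Length: 2\r\n"
    b"\r\n"
    b"{}"
)
print(inject_bearer_token(raw, "sk-example").decode())
```

Because only the header section changes, the body and its Content-Length remain valid, which is what lets this kind of injection stay invisible to the application.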

What we’ll show next

In the remainder of this post, we’ll demonstrate how:

- OpenAI API keys issued by Vault/OpenBao can be injected on the wire using Riptides

- AWS credentials sourced from Vault/OpenBao can be injected into cloud API requests using Riptides

Prerequisites

This guide assumes:

- Vault or OpenBao is already running

- JWT authentication is configured in Vault/OpenBao to trust the Riptides control plane as an OIDC issuer

- The OpenAI secrets engine plugin is enabled and configured

- The AWS secrets engine plugin is enabled and configured

- Roles for generating OpenAI API keys and AWS credentials are already defined

On-the-wire injection of OpenAI API keys sourced from Vault/OpenBao

Define the external OpenAI service in Riptides

```yaml
apiVersion: core.riptides.io/v1alpha1
kind: Service
metadata:
  name: openai-api
  namespace: riptides-system
spec:
  addresses:
    - address: api.openai.com
      port: 443
  labels:
    app: openai-api
  external: true
```

This Riptides Service object declares OpenAI as an external dependency. It enables Riptides to apply identity-aware egress policies when workloads communicate with the OpenAI API.

Configure Vault/OpenBao as a credential source

```yaml
apiVersion: core.riptides.io/v1alpha1
kind: CredentialSource
metadata:
  name: vault-openai-apikeys
  namespace: riptides-system
spec:
  vault:
    address: http://localhost:8200
    jwtAuthMethodPath: jwt
    role: <JWT-AUTH-ROLE>
    path: openai/creds/my-role
    audience: ["vault"]
  type:
    token:
      source: api_key
```

This CredentialSource tells Riptides:

- Which Vault/OpenBao instance to connect to

- Which JWT authentication role to use

- Which secret path to read

- That the credential should be treated as a bearer token

The OpenAI API key behaves as a bearer token and must be sent in the HTTP request header as:

```
Authorization: Bearer <API_KEY>
```

By setting the credential type to token, Riptides activates its built-in bearer token injection mechanism. The source field specifies which attribute in the Vault/OpenBao response contains the token value.
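The source: api_key setting can be read as "take this field from the secret and use it as the bearer token". A minimal Python sketch of that mapping follows, assuming the standard Vault/OpenBao read-response envelope in which the secret's fields sit under data. The function name is hypothetical, and the sample response values (lease ID, duration, key) are illustrative placeholders, not real plugin output.

```python
import json


def bearer_header_from_vault(response_body: str, source: str) -> str:
    """Build an Authorization header value from a Vault/OpenBao read response.

    `source` names the field under the response's "data" object that holds
    the token, mirroring the type.token.source setting in the CredentialSource.
    """
    payload = json.loads(response_body)
    try:
        token = payload["data"][source]
    except KeyError as exc:
        raise KeyError(f"field {source!r} not found in Vault response data") from exc
    return f"Bearer {token}"


# Illustrative response shape; values are placeholders.
sample = json.dumps({
    "lease_id": "openai/creds/my-role/abc123",
    "lease_duration": 3600,
    "renewable": False,
    "data": {"api_key": "sk-example"},
})
print(bearer_header_from_vault(sample, "api_key"))  # Bearer sk-example
```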

Riptides exchanges a workload identity JWT for a short-lived OpenAI API key, without requiring Vault tokens or SDKs inside the workload.
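In Vault/OpenBao terms, this exchange corresponds to two standard HTTP API calls: a JWT auth login (POST /v1/auth/<mount>/login with a role and a JWT) that returns a short-lived client token, followed by a read of the secret path with that token in the X-Vault-Token header. The Python sketch below builds both requests with the standard library so they can be inspected without a running server. It is not tokenex's code, and the function names and the role name my-jwt-role are hypothetical. The mapping of address, jwtAuthMethodPath, role, and path onto these calls follows the CredentialSource fields; that audience sets the JWT's aud claim (which the Vault role's bound audiences must accept) is our assumption.

```python
import json
import urllib.request


def build_jwt_login(vault_addr: str, mount: str, role: str, jwt: str) -> urllib.request.Request:
    """POST /v1/auth/<mount>/login: exchange a workload JWT for a short-lived Vault token."""
    return urllib.request.Request(
        f"{vault_addr}/v1/auth/{mount}/login",
        data=json.dumps({"role": role, "jwt": jwt}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_secret_read(vault_addr: str, path: str, client_token: str) -> urllib.request.Request:
    """GET /v1/<path>: read a dynamic secret (e.g. openai/creds/my-role) with that token."""
    # Note: urllib normalizes stored header names, e.g. "X-vault-token".
    return urllib.request.Request(
        f"{vault_addr}/v1/{path}",
        headers={"X-Vault-Token": client_token},
        method="GET",
    )


def fetch_secret(vault_addr: str, mount: str, role: str, jwt: str, path: str) -> dict:
    """Run both calls against a live server; the Vault token never leaves this function."""
    with urllib.request.urlopen(build_jwt_login(vault_addr, mount, role, jwt)) as resp:
        client_token = json.load(resp)["auth"]["client_token"]
    with urllib.request.urlopen(build_secret_read(vault_addr, path, client_token)) as resp:
        return json.load(resp)


# Field mapping from the CredentialSource above (the JWT value is a placeholder).
login = build_jwt_login("http://localhost:8200", "jwt", "my-jwt-role", "<workload-jwt>")
print(login.get_method(), login.full_url)  # POST http://localhost:8200/v1/auth/jwt/login
```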

Define the workload identity for the AI agent

```yaml
apiVersion: core.riptides.io/v1alpha1
kind: WorkloadIdentity
metadata:
  name: ai-agent
  namespace: riptides-system
spec:
  scope:
    agent:
      id: <AGENT_WORKLOAD_ID>
  workloadID: ai-agent
  selectors:
    - process:name: <AI-AGENT-PROCESS-NAME>
```

This object binds a SPIFFE-based workload identity to a specific process. Only the matching process is treated as the AI agent and is allowed to:

- Authenticate to Vault/OpenBao

- Receive OpenAI API keys

- Use those credentials when calling the OpenAI API
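The isolation property comes from attaching identity to a process rather than to a host. The following Python sketch models the selector check under explicit assumptions: only the process name is considered, every selector entry of a WorkloadIdentity must match, and an identity with no selectors matches nothing. The types, the process name ai-agent, and the function names are hypothetical and say nothing about how Riptides actually attests processes in the kernel.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Process:
    pid: int
    name: str


@dataclass(frozen=True)
class WorkloadIdentity:
    workload_id: str
    selectors: tuple  # each entry is a dict such as {"process:name": "ai-agent"}


def process_attributes(proc: Process) -> dict:
    # Assumption: only the process name is modelled; real selectors may cover more.
    return {"process:name": proc.name}


def resolve_identity(proc: Process, identities: list) -> Optional[str]:
    """Return the workload ID whose selectors all match the process, else None."""
    attrs = process_attributes(proc)
    for ident in identities:
        # An identity with no selectors is treated as matching nothing.
        if ident.selectors and all(
            attrs.get(key) == value
            for selector in ident.selectors
            for key, value in selector.items()
        ):
            return ident.workload_id
    return None


identities = [WorkloadIdentity("ai-agent", ({"process:name": "ai-agent"},))]
print(resolve_identity(Process(4242, "ai-agent"), identities))  # ai-agent
print(resolve_identity(Process(4243, "curl"), identities))      # None
```

A co-located process on the same node (curl above) resolves to no identity, so nothing downstream would hand it the OpenAI credential.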

Bind the credential source to the workload

```yaml
apiVersion: core.riptides.io/v1alpha1
kind: CredentialBinding
metadata:
  name: vault-openai-apikeys-binding
  namespace: riptides-system
spec:
  workloadID: ai-agent
  credentialSource: vault-openai-apikeys
  propagation:
    injection:
      selectors:
        - app: openai-api
```

This CredentialBinding connects the AI agent identity with the Vault credential source and defines how the credential is applied. In this case, Riptides injects the OpenAI API key directly into outbound requests sent to the OpenAI API.
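Put together, a binding answers one question per outbound connection: for this workload identity and this destination, which credential source, if any, should be injected? A minimal Python model of that lookup follows. The class and function names are hypothetical, and the matching rules are assumptions: a binding applies only to its workloadID, and an injection selector matches a Service when every label it lists is present on that Service (any one selector entry suffices).

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CredentialBinding:
    workload_id: str
    credential_source: str
    injection_selectors: list = field(default_factory=list)  # e.g. [{"app": "openai-api"}]


def labels_match(selector: dict, labels: dict) -> bool:
    """A selector matches when every key/value it lists is present in the labels."""
    return all(labels.get(k) == v for k, v in selector.items())


def credential_for(workload_id: str, service_labels: dict, bindings: list) -> Optional[str]:
    """Pick the credential source to inject for this workload and destination, if any."""
    for binding in bindings:
        if binding.workload_id != workload_id:
            continue  # bindings never apply to other identities
        if any(labels_match(sel, service_labels) for sel in binding.injection_selectors):
            return binding.credential_source
    return None


bindings = [CredentialBinding("ai-agent", "vault-openai-apikeys", [{"app": "openai-api"}])]
openai_labels = {"app": "openai-api"}
print(credential_for("ai-agent", openai_labels, bindings))        # vault-openai-apikeys
print(credential_for("aws-cli", openai_labels, bindings))         # None (other identity)
print(credential_for("ai-agent", {"app": "aws-api"}, bindings))   # None (other destination)
```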

On-the-wire injection of AWS credentials sourced from Vault/OpenBao

Define the external AWS service in Riptides

```yaml
apiVersion: core.riptides.io/v1alpha1
kind: Service
metadata:
  name: aws-api
  namespace: riptides-system
spec:
  addresses:
    # Quoted: an unquoted value starting with * is parsed as a YAML alias.
    - address: "*.amazonaws.com"
      port: 443
  labels:
    app: aws-api
  external: true
```

This Service object declares the AWS API as an external dependency, allowing Riptides to apply identity-aware egress controls to AWS API calls.
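The wildcard address is meant to cover regional and service-specific endpoints such as sts.amazonaws.com or bedrock-runtime.us-east-1.amazonaws.com. A small Python sketch of suffix-style wildcard matching follows. That a wildcard spans any number of DNS labels (unlike TLS certificate wildcards, which cover exactly one) is an assumption about Riptides' semantics, chosen here because multi-label AWS endpoints would otherwise not match; host_matches is a hypothetical name.

```python
def host_matches(pattern: str, host: str) -> bool:
    """Match a destination host against a Service address pattern.

    Assumption: "*.example.com" matches any host ending in ".example.com",
    across any number of labels, but not "example.com" itself.
    Comparison is case-insensitive and ignores a trailing dot.
    """
    pattern = pattern.lower().rstrip(".")
    host = host.lower().rstrip(".")
    if pattern.startswith("*."):
        return host.endswith(pattern[1:])  # pattern[1:] keeps the leading "."
    return host == pattern


for h in ["sts.amazonaws.com", "bedrock-runtime.us-east-1.amazonaws.com",
          "amazonaws.com", "evilamazonaws.com"]:
    print(h, host_matches("*.amazonaws.com", h))
```

Keeping the leading dot in the suffix check is what prevents look-alike domains such as evilamazonaws.com from matching.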

Configure Vault/OpenBao as an AWS credential source

```yaml
apiVersion: core.riptides.io/v1alpha1
kind: CredentialSource
metadata:
  name: vault-aws-creds
  namespace: riptides-system
spec:
  vault:
    address: http://localhost:8200
    jwtAuthMethodPath: jwt
    role: <JWT-AUTH-ROLE>
    path: aws/creds/my-role
    audience: ["vault"]
  type:
    aws: {}
```

By setting the credential type to aws, Riptides activates its built-in AWS credential injection mechanism. Under the hood, this uses the libsigv4 library to sign AWS API requests in kernel space.
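Unlike the OpenAI case, AWS credentials are not sent as-is: each request must be signed with AWS Signature Version 4. The following is not libsigv4 or Riptides code; it is a compact user-space Python sketch of the standard SigV4 header-signing steps (canonical request, string to sign, derived signing key, Authorization header), fed with the fields the Vault/OpenBao AWS secrets engine returns (access_key, secret_key, and security_token for STS-based roles). Simplifications, all assumptions of this sketch: the path is already URI-encoded, query parameters are a flat dict, and only host, x-amz-date, and (when present) x-amz-security-token are signed. The credential values are fakes.

```python
import hashlib
import hmac
from datetime import datetime, timezone
from urllib.parse import quote


def _hmac(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def signing_key(secret_key: str, date: str, region: str, service: str) -> bytes:
    """Derive the SigV4 key: "AWS4"+secret -> date -> region -> service -> "aws4_request"."""
    k_date = _hmac(("AWS4" + secret_key).encode("utf-8"), date)
    k_region = _hmac(k_date, region)
    k_service = _hmac(k_region, service)
    return _hmac(k_service, "aws4_request")


def sign_request(method, host, path, query, body, creds, region, service, now=None):
    """Return the headers (including Authorization) to add to an AWS API request."""
    now = now or datetime.now(timezone.utc)
    amz_date = now.strftime("%Y%m%dT%H%M%SZ")
    date = amz_date[:8]

    headers = {"host": host, "x-amz-date": amz_date}
    if creds.get("security_token"):  # present for STS-based Vault roles
        headers["x-amz-security-token"] = creds["security_token"]

    # Canonical request: method, path, sorted query, sorted headers, signed list, payload hash.
    names = sorted(headers)
    canonical_headers = "".join(f"{n}:{headers[n].strip()}\n" for n in names)
    signed_headers = ";".join(names)
    canonical_query = "&".join(
        f"{quote(k, safe='-_.~')}={quote(v, safe='-_.~')}" for k, v in sorted(query.items())
    )
    payload_hash = hashlib.sha256(body).hexdigest()
    canonical_request = "\n".join(
        [method, path, canonical_query, canonical_headers, signed_headers, payload_hash]
    )

    # String to sign binds the timestamp and credential scope to the request hash.
    scope = f"{date}/{region}/{service}/aws4_request"
    string_to_sign = "\n".join([
        "AWS4-HMAC-SHA256",
        amz_date,
        scope,
        hashlib.sha256(canonical_request.encode("utf-8")).hexdigest(),
    ])

    key = signing_key(creds["secret_key"], date, region, service)
    signature = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()
    headers["authorization"] = (
        f"AWS4-HMAC-SHA256 Credential={creds['access_key']}/{scope}, "
        f"SignedHeaders={signed_headers}, Signature={signature}"
    )
    return headers


# Example: sign an STS GetCallerIdentity call with fake Vault-issued credentials.
vault_data = {"access_key": "AKIDEXAMPLE", "secret_key": "example-secret", "security_token": None}
signed = sign_request(
    "GET", "sts.amazonaws.com", "/",
    {"Action": "GetCallerIdentity", "Version": "2011-06-15"},
    b"", vault_data, "us-east-1", "sts",
)
print(signed["authorization"])
```

The secret key is used only to derive the signing key and never travels on the wire, which is why signing has to happen at the point where the request is written rather than by simply adding a header.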

Define the workload identity for the AWS client

```yaml
apiVersion: core.riptides.io/v1alpha1
kind: WorkloadIdentity
metadata:
  name: aws-cli
  namespace: riptides-system
spec:
  scope:
    agent:
      id: <AGENT_WORKLOAD_ID>
  workloadID: aws-cli
  selectors:
    - process:name: aws
```

This workload identity applies specifically to the AWS CLI process.

Bind the AWS credential source to the workload

```yaml
apiVersion: core.riptides.io/v1alpha1
kind: CredentialBinding
metadata:
  name: vault-aws-creds-binding
  namespace: riptides-system
spec:
  workloadID: aws-cli
  credentialSource: vault-aws-creds
  propagation:
    injection:
      selectors:
        - app: aws-api
```

With this binding in place, Riptides injects Vault-issued AWS credentials directly into outbound AWS API requests without exposing credentials to user space, environment variables, or configuration files.

Key takeaways

Riptides supports two complementary models for secretless access:

- Riptides-provisioned temporary credentials injected on the wire: ideal when you want Riptides to issue and inject cloud credentials directly.

- Vault/OpenBao-sourced credentials injected on the wire: ideal when Vault/OpenBao is already your source of truth for cloud, database, or API credentials.

Both approaches share the same core properties:

- No long-lived secrets

- Kernel-level enforcement

- Strong workload isolation

- Minimal operational overhead

- Clear auditability and policy control

On-the-wire injection of credentials represents the most secure end state: credentials never exist in workload user space at all.

When that model isn’t feasible for business or technical reasons, the sysfs-based delivery approach remains available: it is still far more secure than traditional methods and is fully managed by Riptides at the kernel level.

Together, these options give organizations a practical, incremental path toward eliminating secrets from modern AI and cloud-native systems.

With these takeaways in mind, the next question is how this model fits into your existing security architecture.

Who should use which model?

The two credential injection models serve different organizational needs and maturity levels.

Use Riptides-provisioned temporary credentials if:

- You want the simplest path to secretless access for cloud and AI APIs

- You are not already standardized on Vault/OpenBao

- You prefer Riptides to handle the credential lifecycle end-to-end

- You want an immediate reduction in secret sprawl with minimal platform changes

This model is ideal for teams adopting secretless infrastructure for the first time or for greenfield platforms.

Use Vault/OpenBao-sourced credentials if:

- Vault/OpenBao is already your system of record for credentials

- You issue short-lived cloud, database, or API credentials via Vault/OpenBao today

- You want to preserve existing policies, audit trails, and governance controls

- You need to consume credentials that Riptides cannot provision natively, but that Vault/OpenBao supports via a secrets engine plugin

This model is ideal for enterprises with mature security programs that want to eliminate secret handling at runtime without disrupting existing Vault/OpenBao workflows.

Both approaches share the same core guarantees: identity-based access, short-lived credentials, kernel-level enforcement, and zero secret distribution to developers or operators.

If you enjoyed this post, follow us on LinkedIn and X for more updates. If you'd like to see Riptides in action, get in touch with us for a demo.