The growing challenge of credential management for AI agents

We are entering the era of agentic AI, where AI agents are no longer experimental tools or isolated assistants. They are becoming core components of modern software systems, executing tasks, making decisions, and interacting with external services on behalf of users and organizations. As adoption accelerates, enterprises are rapidly embedding AI agents into business-critical workflows.

Under the hood, most of these agents rely on GenAI platform APIs, with OpenAI being the most widely used provider today. To access these APIs, AI agents authenticate using API keys. This same pattern applies to other major providers such as Mistral and Anthropic Claude, and is likely to remain common across the broader GenAI ecosystem.

The core problem: long-lived static API keys

OpenAI API keys are:

- Long-lived

- Static

- Highly sensitive

This model was acceptable when only a small number of centrally managed applications accessed AI APIs. It breaks down in an environment where large numbers of AI agents are deployed dynamically across clusters, regions, and teams.

Long-lived static API keys introduce several systemic issues:

- Security risk: a leaked key grants broad access until manually revoked

- Operational burden: rotation, revocation, and distribution must be managed continuously

- Compliance challenges: static credentials conflict with zero-trust, least-privilege, and modern audit requirements

In practice, these keys often end up embedded in configuration files, CI pipelines, container images, or environment variables, significantly increasing the blast radius of any compromise.

Vault and OpenBao help — but not enough

HashiCorp Vault and OpenBao address part of this problem by supporting dynamic OpenAI API keys through a secrets engine plugin. With this approach:

- API keys are generated on demand

- Keys are short-lived

- Vault/OpenBao automatically revokes keys upon expiration

This is a major improvement over static secrets. Credentials are no longer perpetual, and the window of exposure is dramatically reduced.

However, this does not fully solve the problem.

Even temporary OpenAI API keys must still be:

- Securely delivered to the AI agent

- Protected from other workloads running on the same infrastructure

- Refreshed seamlessly as they expire

To achieve this, developers typically need to:

- Integrate Vault/OpenBao SDKs into every AI agent

- Provision credentials that allow agents to authenticate to Vault/OpenBao

- Implement renewal, retry, and error-handling logic

As the number of agents grows, this approach becomes increasingly complex and fragile.
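To make that burden concrete, here is a minimal Python sketch of the caching, refresh-before-expiry, and retry logic that each agent would otherwise carry. The `fetch` callable stands in for a Vault/OpenBao SDK call and is hypothetical, as are the class name and the 60-second refresh margin. Real code would also need authentication, backoff, and error classification.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Optional, Tuple

# Hypothetical fetcher: returns (api_key, lease_ttl_seconds). In a real agent this
# would wrap a Vault/OpenBao login plus a read of the credentials path.
Fetcher = Callable[[], Tuple[str, float]]


@dataclass
class RefreshingKey:
    """Caches a short-lived API key and fetches a new one shortly before it expires."""

    fetch: Fetcher
    refresh_margin: float = 60.0                 # refresh this many seconds early
    clock: Callable[[], float] = time.monotonic  # injectable for testing
    _key: Optional[str] = field(default=None, init=False)
    _expires_at: float = field(default=0.0, init=False)

    def get(self, attempts: int = 3) -> str:
        now = self.clock()
        if self._key is not None and now < self._expires_at - self.refresh_margin:
            return self._key                     # cached key is still comfortably valid
        last_error: Optional[Exception] = None
        for _ in range(attempts):                # naive immediate retry; real code would back off
            try:
                key, ttl = self.fetch()
                self._key, self._expires_at = key, now + ttl
                return key
            except Exception as exc:             # remember the failure and try again
                last_error = exc
        raise RuntimeError("could not refresh the API key") from last_error
```

Every agent that talks to Vault/OpenBao directly needs some variant of this logic. That is exactly the per-application code the rest of this post moves into the platform.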

Gartner predicts that 40% of enterprise applications will feature task-specific AI agents by 2026, up from less than 5% in 2025.

At this scale, secret distribution and credential management are no longer application-level concerns — they become platform-level security challenges.

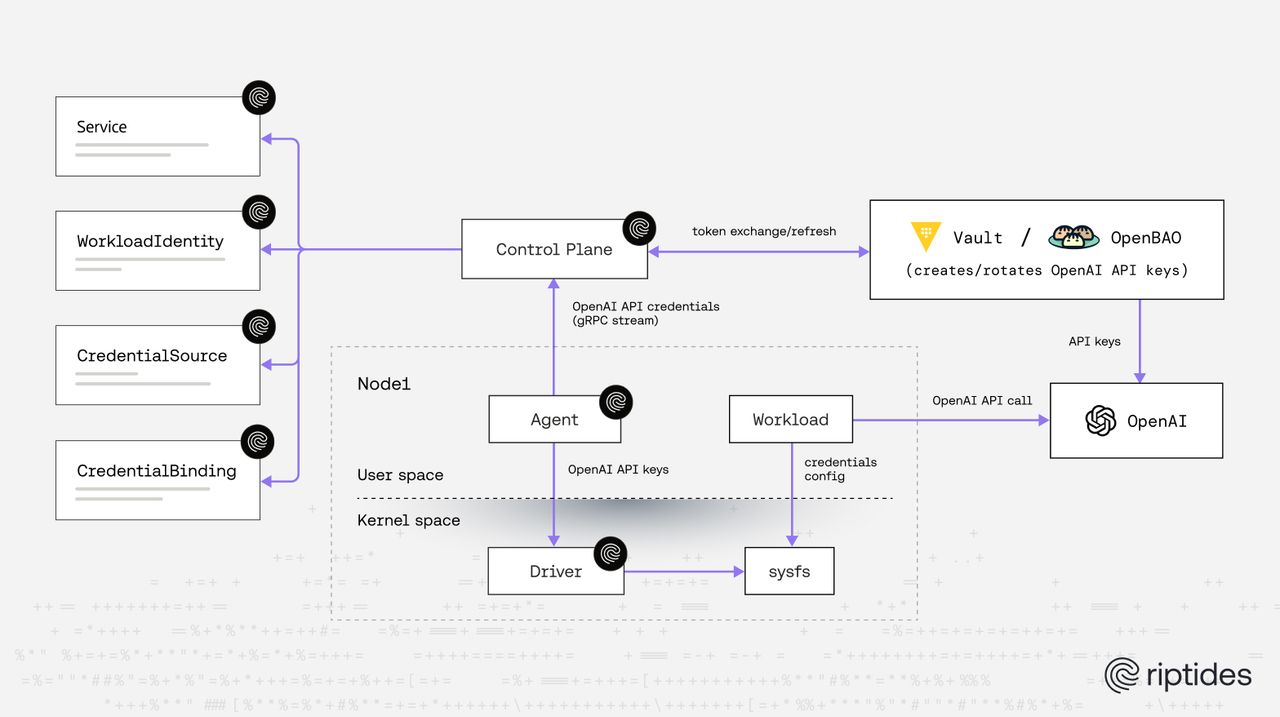

How Riptides solves this

Riptides addresses this challenge by starting from a different premise: identity must be the root of trust.

Identity first, secrets second

With Riptides:

- Every workload runs with a verifiable, SPIFFE-based workload identity

- Identity is enforced at runtime, not inferred from configuration

- Access decisions are based on who the workload is, not what secrets it happens to possess

Exchanging identity for OpenAI API keys

Riptides relies on tokenex to access Vault and OpenBao secrets through their native JWT authentication mechanisms, exchanging workload identity tokens for secrets without persisting any Vault/OpenBao tokens or exposing them to the workload.

Using tokenex, Riptides:

- Issues ID tokens (JWTs) to verified workloads

- Encodes the workload’s SPIFFE identity into those tokens

- Exchanges the ID token for a short-lived OpenAI API key via Vault or OpenBao

This ensures that:

- Only authenticated and authorized workloads can obtain OpenAI credentials

- OpenAI API keys are temporary by default

- No static OpenAI keys are embedded in application code, configuration, or images

Secure delivery enforced by the kernel

Riptides retrieves short-lived OpenAI API keys from Vault/OpenBao on demand and places them into a dedicated sysfs file provided by the Riptides Linux kernel module, ensuring that read access is enforced at the kernel level.

Key properties of this approach:

- Access to the sysfs file is enforced in the Linux kernel, not by application logic

- Only the workload bound to the expected SPIFFE identity is authorized to read the file’s contents

- Other processes, even those co-located on the same node, cannot read the secret

From the AI agent’s perspective, consumption is trivial:

- The API key is read via a simple file operation

- No Vault/OpenBao SDK is required

- No custom secret refresh logic is needed

This makes secret consumption as simple as a file read, while ensuring that access control is stronger than environment variables or in-process memory, and cannot be bypassed by misconfiguration or code changes.

What this enables

By combining verifiable workload identity, short-lived credentials, and kernel-level enforcement, Riptides provides:

- Just-in-time issuance and delivery of short-lived OpenAI API keys, reducing credential exposure windows

- Zero long-lived secrets on the host: neither developers nor operators ever provision, store, or rotate API keys on the infrastructure where AI agents run

- Strong, kernel-enforced isolation between co-located workloads, limiting blast radius and preventing lateral access to credentials

- Clear separation of identity, access policy, and application logic, enabling consistent enforcement and auditable controls

Together, these properties align with least privilege, zero trust, and defense-in-depth principles, while simplifying compliance with security frameworks and internal audit requirements.

Setting this up with Riptides

This section walks through how to configure Vault/OpenBao and Riptides so that AI agents can securely obtain short‑lived OpenAI API keys without embedding Vault clients, handling Vault tokens, or managing secrets directly in application code.

The configuration is presented step by step, with each snippet accompanied by an explanation of what it does, why it is needed, and how it fits into the overall flow.

Prerequisites

This guide assumes:

- Vault or OpenBao is already running

- JWT authentication is configured in Vault/OpenBao to trust the Riptides Control Plane as an OIDC issuer

- The OpenAI secrets engine plugin is installed

We do not cover JWT auth configuration in detail here. A complete example can be found in this post.

For instructions on installing the OpenAI secrets engine plugin, see:

https://github.com/gitrgoliveira/vault-plugin-secrets-openai

Note

In all examples below, if you are using HashiCorp Vault instead of OpenBao, replace the `bao` CLI with `vault`.

Enable the OpenAI secrets engine

```shell
bao secrets enable openai
```

This command enables the OpenAI secrets engine at the `openai/` path. Once enabled, Vault/OpenBao can dynamically generate OpenAI API keys instead of relying on static, long‑lived credentials.

Configure the OpenAI secrets engine

```shell
bao write openai/config \
  admin_api_key="$OPENAI_ADMIN_KEY" \
  admin_api_key_id="$OPENAI_ADMIN_KEY_ID" \
  organization_id="$OPENAI_ORG_ID"
```

Here you configure the secrets engine with an administrative OpenAI API key. This key is used only by Vault/OpenBao to create and revoke project‑scoped, short‑lived API keys on behalf of workloads.

This administrative key never leaves Vault/OpenBao and is not exposed to AI agents.

Create a role for generating OpenAI API keys

```shell
bao write openai/roles/my-role \
  project_id="$OPENAI_PROJ_ID" \
  service_account_name_template="vault-{{.RoleName}}-{{.RandomSuffix}}" \
  ttl=15m \
  max_ttl=1h
```

This role defines how Vault/OpenBao generates OpenAI API keys:

- Which OpenAI project the keys belong to

- How the backing OpenAI service account is named

- The default lifetime (`ttl`) and maximum lifetime (`max_ttl`) of issued keys

Every API key generated through this role is short‑lived by design, limiting blast radius and exposure.
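The two limits interact in the usual Vault lease way: `ttl` is the default lease length granted at issuance, and any renewal can extend the lease only up to `max_ttl` measured from issuance. The Python sketch below works through that arithmetic for the values above. It assumes the OpenAI plugin follows standard Vault lease semantics; the helper names are hypothetical, and the parser only handles single-unit durations such as `15m` or `1h`.

```python
import re

_UNIT_SECONDS = {"s": 1, "m": 60, "h": 3600}


def parse_duration(text: str) -> int:
    """Parse a single-unit duration such as '90s', '15m', or '1h' into seconds."""
    match = re.fullmatch(r"(\d+)([smh])", text)
    if match is None:
        raise ValueError(f"unsupported duration: {text!r}")
    return int(match.group(1)) * _UNIT_SECONDS[match.group(2)]


def lease_expiry(issued_at: int, now: int, increment: str, max_ttl: str) -> int:
    """Expiry (in seconds) after granting `increment` at time `now`, capped by max_ttl."""
    return min(now + parse_duration(increment), issued_at + parse_duration(max_ttl))
```

For example, a key issued at t=0 expires at 900 s (15 minutes) by default, and a 15-minute renewal requested at 3000 s (50 minutes) is clamped to 3600 s, the one-hour `max_ttl`. Even without renewals, the key is revoked once its lease ends.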

Create a policy allowing access to generated API keys

```shell
bao policy write read-openai-apikeys -<<EOF
path "openai/creds/my-role" {
  capabilities = ["read"]
}
EOF
```

This policy allows a client to read credentials generated by the my-role OpenAI role. It does not grant administrative privileges or access to other secrets.

In the next steps, this policy will be bound to a specific workload identity.
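Vault/OpenBao policies are deny-by-default: a request is allowed only if a policy path matches and lists the needed capability. The toy Python model below illustrates why this policy grants nothing beyond reading `openai/creds/my-role`. It handles only exact paths and a trailing `*` glob and ignores Vault's rule-priority and `deny` handling, so treat it as an illustration rather than Vault's actual matcher. The function names are hypothetical.

```python
import re
from typing import Dict, List


def _path_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a policy path into a regex: exact match, or prefix match if it ends in '*'."""
    if pattern.endswith("*"):
        return re.compile(re.escape(pattern[:-1]) + ".*")
    return re.compile(re.escape(pattern))


def allowed(policy: Dict[str, List[str]], path: str, capability: str) -> bool:
    """Default deny: True only if some rule matches the path and grants the capability."""
    return any(
        _path_regex(rule).fullmatch(path) is not None and capability in caps
        for rule, caps in policy.items()
    )


# The policy written above, expressed as a plain dict.
READ_OPENAI_APIKEYS = {"openai/creds/my-role": ["read"]}
```

For example, `allowed(READ_OPENAI_APIKEYS, "openai/config", "update")` is `False`, so a workload holding this policy cannot touch the engine's administrative configuration.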

Configure JWT authentication for the AI agent identity

```shell
bao write auth/jwt/role/openai-apikeys \
  user_claim="sub" \
  bound_audiences="vault" \
  bound_subject="<AI_AGENT_WORKLOAD_SPIFFE_ID>" \
  token_policies="read-openai-apikeys" \
  token_type="service" \
  token_num_uses=2 \
  role_type="jwt"
```

This configuration tells Vault/OpenBao which identity is allowed to authenticate using JWTs:

- Authentication is bound to a specific SPIFFE workload identity (`bound_subject`)

- The JWT must be issued for the expected audience (`vault`)

- The resulting Vault token carries only the `read-openai-apikeys` policy and can be used at most twice (`token_num_uses=2`)

No Vault token is stored or managed by the AI agent. Authentication is entirely JWT‑based and secretless.
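Vault/OpenBao performs these checks itself after validating the token's signature against the issuer's keys. The Python sketch below only illustrates what `bound_subject` and `bound_audiences` test on the decoded claims, assuming, as configured above, that the workload's SPIFFE ID is carried in the `sub` claim. It deliberately skips signature verification and must not be used for real authorization decisions; the function names are hypothetical.

```python
import base64
import json
from typing import Any, Dict


def unverified_claims(token: str) -> Dict[str, Any]:
    """Decode a JWT's payload segment. Does NOT verify the signature."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)        # restore the stripped base64url padding
    return json.loads(base64.urlsafe_b64decode(payload))


def satisfies_role(claims: Dict[str, Any], bound_subject: str, bound_audience: str) -> bool:
    """Mirror the role's bound_subject and bound_audiences checks on decoded claims."""
    aud = claims.get("aud", [])
    audiences = [aud] if isinstance(aud, str) else list(aud)  # "aud" may be a string or a list
    return claims.get("sub") == bound_subject and bound_audience in audiences
```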

Define the external OpenAI service in Riptides

```yaml
apiVersion: core.riptides.io/v1alpha1
kind: Service
metadata:
  name: openai-api
  namespace: riptides-system
spec:
  addresses:
    - address: api.openai.com
      port: 443
  labels:
    app: openai-api
  external: true
```

This Riptides Service object declares OpenAI as an external dependency. It allows Riptides to apply identity‑aware egress policies when workloads communicate with the OpenAI API.

Configure Vault as a credential source

```yaml
apiVersion: core.riptides.io/v1alpha1
kind: CredentialSource
metadata:
  name: vault-openai-apikeys
  namespace: riptides-system
spec:
  vault:
    address: http://localhost:8200
    jwtAuthMethodPath: jwt
    role: <JWT-AUTH-ROLE>
    path: openai/creds/my-role
    audience: ["vault"]
```

This CredentialSource tells Riptides:

- Which Vault/OpenBao instance to contact

- Which JWT auth role to use (`openai-apikeys` in the example above)

- Which secret path to read

Riptides will exchange a workload identity JWT for a short‑lived OpenAI API key, without requiring Vault tokens or SDKs in the workload.
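For readers curious about what this exchange amounts to on the wire, the Python sketch below builds the two calls from Vault's documented HTTP API that correspond to this CredentialSource: a login against the JWT auth mount (`POST /v1/auth/<mount>/login`), then a read of the credential path using the returned client token. It is an illustration, not tokenex's implementation, and the AI agent itself never needs this code. The function names are hypothetical, and error handling is omitted.

```python
import json
import urllib.request


def jwt_login_request(vault_addr: str, mount: str, role: str, jwt: str) -> urllib.request.Request:
    """POST /v1/auth/<mount>/login: trade a workload JWT for a Vault token."""
    return urllib.request.Request(
        f"{vault_addr}/v1/auth/{mount}/login",
        data=json.dumps({"role": role, "jwt": jwt}).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def read_secret_request(vault_addr: str, path: str, vault_token: str) -> urllib.request.Request:
    """GET /v1/<path> using the use-limited Vault token from the login response."""
    return urllib.request.Request(
        f"{vault_addr}/v1/{path}",
        headers={"X-Vault-Token": vault_token},
        method="GET",
    )


def exchange(vault_addr: str, mount: str, role: str, path: str, jwt: str) -> dict:
    """Perform both calls and return the secret's `data` (api_key, api_key_id, ...)."""
    with urllib.request.urlopen(jwt_login_request(vault_addr, mount, role, jwt)) as resp:
        token = json.load(resp)["auth"]["client_token"]
    with urllib.request.urlopen(read_secret_request(vault_addr, path, token)) as resp:
        return json.load(resp)["data"]
```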

Define the workload identity for the AI agent

```yaml
apiVersion: core.riptides.io/v1alpha1
kind: WorkloadIdentity
metadata:
  name: ai-agent
  namespace: riptides-system
spec:
  scope:
    agent:
      id: <AGENT_WORKLOAD_ID>
  workloadID: ai-agent
  selectors:
    - process:name: [<AI-AGENT-PROCESS-NAME>]
```

This object binds a SPIFFE-based workload identity to a specific process. Only the matching process is treated as the AI agent and is allowed to:

- Authenticate to Vault/OpenBao

- Receive OpenAI API keys

- Use those credentials when calling the OpenAI API

Bind the credential source to the workload

```yaml
apiVersion: core.riptides.io/v1alpha1
kind: CredentialBinding
metadata:
  name: vault-openai-apikeys-binding
  namespace: riptides-system
spec:
  workloadID: ai-agent
  credentialSource: vault-openai-apikeys
  propagation:
    sysfs: {}
```

This CredentialBinding connects the AI agent identity with the Vault credential source and specifies how the credential is delivered.

By selecting sysfs, Riptides exposes the secret through a file managed by the Riptides Linux kernel module.

Discover the sysfs file path

```shell
kubectl get credentialbindings.core.riptides.io -n riptides-system vault-openai-apikeys-binding -o yaml
```

This command shows the resolved credential binding, including the exact sysfs file path where the OpenAI API key is exposed.

Example output

```yaml
status:
  state: OK
  sysfs:
    files:
      - path: /sys/module/riptides/credentials/2e08019a-a435-55e3-9ba7-f01c09b9fc2b/crb-vault-openai-apikeys-binding/vault.json
        type: CREDENTIAL
```

The file path shown above is created and managed by the Riptides Linux kernel module. Only the authorized workload can read its contents.
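When automating this step, it can be easier to request JSON from `kubectl` (`-o json`) and extract the path programmatically. The Python sketch below assumes the JSON form mirrors the YAML status shown above (`status.state`, `status.sysfs.files[].path` and `.type`); the function name is hypothetical.

```python
import json
from typing import List


def credential_paths(binding_json: str) -> List[str]:
    """Return the sysfs paths of CREDENTIAL files from a CredentialBinding's JSON."""
    status = json.loads(binding_json).get("status", {})
    if status.get("state") != "OK":
        raise RuntimeError(f"binding not ready: state={status.get('state')!r}")
    files = status.get("sysfs", {}).get("files", [])
    return [entry["path"] for entry in files if entry.get("type") == "CREDENTIAL"]
```

For example, pipe the output of the same `kubectl get` command with `-o json` into a script that calls `credential_paths(sys.stdin.read())`.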

Contents of the sysfs credential file

```json
{
  "api_key": "sk-svcacct-V8rgR1rZ-MVmeVUR-....",
  "api_key_id": "key_1Nj...",
  "service_account": "vault-my-role-...",
  "service_account_id": "user-gLNLYNq....."
}
```

This file contains the short‑lived OpenAI API key and associated metadata. The key exists only for its configured TTL and is revoked automatically by Vault/OpenBao.
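Before handing the key to an HTTP client, an agent may want to fail fast if the file is not in the expected shape. The minimal Python sketch below parses the JSON document shown above and checks that its four fields are present. The function names are hypothetical, and only `api_key` is strictly needed to call the OpenAI API.

```python
import json
from pathlib import Path

# Field names as they appear in the example vault.json above.
EXPECTED_FIELDS = ("api_key", "api_key_id", "service_account", "service_account_id")


def parse_openai_credential(text: str) -> dict:
    """Parse the credential JSON and raise if any expected field is missing or empty."""
    data = json.loads(text)
    missing = [name for name in EXPECTED_FIELDS if not data.get(name)]
    if missing:
        raise ValueError(f"credential file is missing fields: {missing}")
    return data


def load_openai_credential(path: str) -> dict:
    """Read the Riptides-managed sysfs file (path from the CredentialBinding status)."""
    return parse_openai_credential(Path(path).read_text())
```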

Validate the API key

```shell
curl https://api.openai.com/v1/models \
  -H "Authorization: Bearer <api_key from vault.json>" \
  -H "OpenAI-Organization: <ORGANIZATION ID>" \
  -H "OpenAI-Project: <PROJECT ID>"
```

Output:

```json
{
  "object": "list",
  "data": [
    {
      "id": "gpt-4-0613",
      "object": "model",
      "created": 1686588896,
      "owned_by": "openai"
    },
    {
      "id": "gpt-4",
      "object": "model",
      "created": 1687882411,
      "owned_by": "openai"
    },
    {
      "id": "gpt-3.5-turbo",
      "object": "model",
      "created": 1677610602,
      "owned_by": "openai"
    },
    {
      "id": "gpt-5.2-codex",
      "object": "model",
      "created": 1766164985,
      "owned_by": "system"
    },
    {
      "id": "gpt-4o-mini-tts-2025-12-15",
      "object": "model",
      "created": 1765610837,
      "owned_by": "system"
    },
    {
      "id": "gpt-realtime-mini-2025-12-15",
      "object": "model",
      "created": 1765612007,
      "owned_by": "system"
    },
    {
      "id": "gpt-audio-mini-2025-12-15",
      "object": "model",
      "created": 1765760008,
      "owned_by": "system"
    },
    {
      "id": "chatgpt-image-latest",
      "object": "model",
      "created": 1765925279,
      "owned_by": "system"
    }
    ...
  ]
}
```

This request demonstrates that the API key retrieved via Riptides and Vault/OpenBao works as expected.

What the AI agent needs to do

The AI agent simply reads the api_key field from the sysfs file and uses it when calling the OpenAI API.

- No Vault/OpenBao integration is required

- No long‑lived secrets are present on the host

- Access is enforced at the Linux kernel level

This provides a simple, secure, and auditable way for AI agents to consume GenAI APIs at scale.
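In practice this can be a couple of small functions. The Python sketch below re-reads the sysfs file on every call, so a key that Vault/OpenBao has rotated is picked up without any refresh logic. It then builds the same `GET /v1/models` request as the curl example above using only the standard library. The function names are hypothetical, and the file path must be taken from your own CredentialBinding status.

```python
import json
import urllib.request
from pathlib import Path


def current_api_key(credential_file: str) -> str:
    """Read the key fresh on every call; no caching, so a rotated key is used right away."""
    return json.loads(Path(credential_file).read_text())["api_key"]


def models_request(credential_file: str, org_id: str, project_id: str) -> urllib.request.Request:
    """Build GET https://api.openai.com/v1/models with the current short-lived key."""
    return urllib.request.Request(
        "https://api.openai.com/v1/models",
        headers={
            "Authorization": f"Bearer {current_api_key(credential_file)}",
            "OpenAI-Organization": org_id,
            "OpenAI-Project": project_id,
        },
    )


def list_models(credential_file: str, org_id: str, project_id: str) -> dict:
    """Send the request and return the decoded JSON body."""
    with urllib.request.urlopen(models_request(credential_file, org_id, project_id)) as resp:
        return json.load(resp)
```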

Why it matters

As AI agents become a foundational building block of modern systems, credential handling must evolve.

Riptides enables an operating model where:

- AI agents never hold long-lived secrets

- OpenAI API keys are issued just in time and revoked automatically

- Identity is the primary security primitive

- Security improves as systems scale, rather than degrading

This approach:

- Reduces blast radius

- Simplifies development and operations

- Aligns with zero-trust and least-privilege principles

- Makes large-scale agentic systems operationally sustainable

In an ecosystem rapidly moving toward autonomous workloads, identity-first, short-lived credentials are no longer optional — they are essential.

Riptides brings this model to GenAI workloads today, starting with OpenAI and extending naturally to the broader AI platform landscape.

In this post, we showed how short-lived OpenAI API keys can be securely delivered to AI agents using identity and kernel-level enforcement. In the next post, we’ll go one step further and show how Riptides can inject these keys directly into outbound requests, so workloads never read or handle API keys at all.

If you enjoyed this post, follow us on LinkedIn and X for more updates. If you'd like to see Riptides in action, get in touch with us for a demo.